Authored by: Kylie Zhang and Peter Henderson

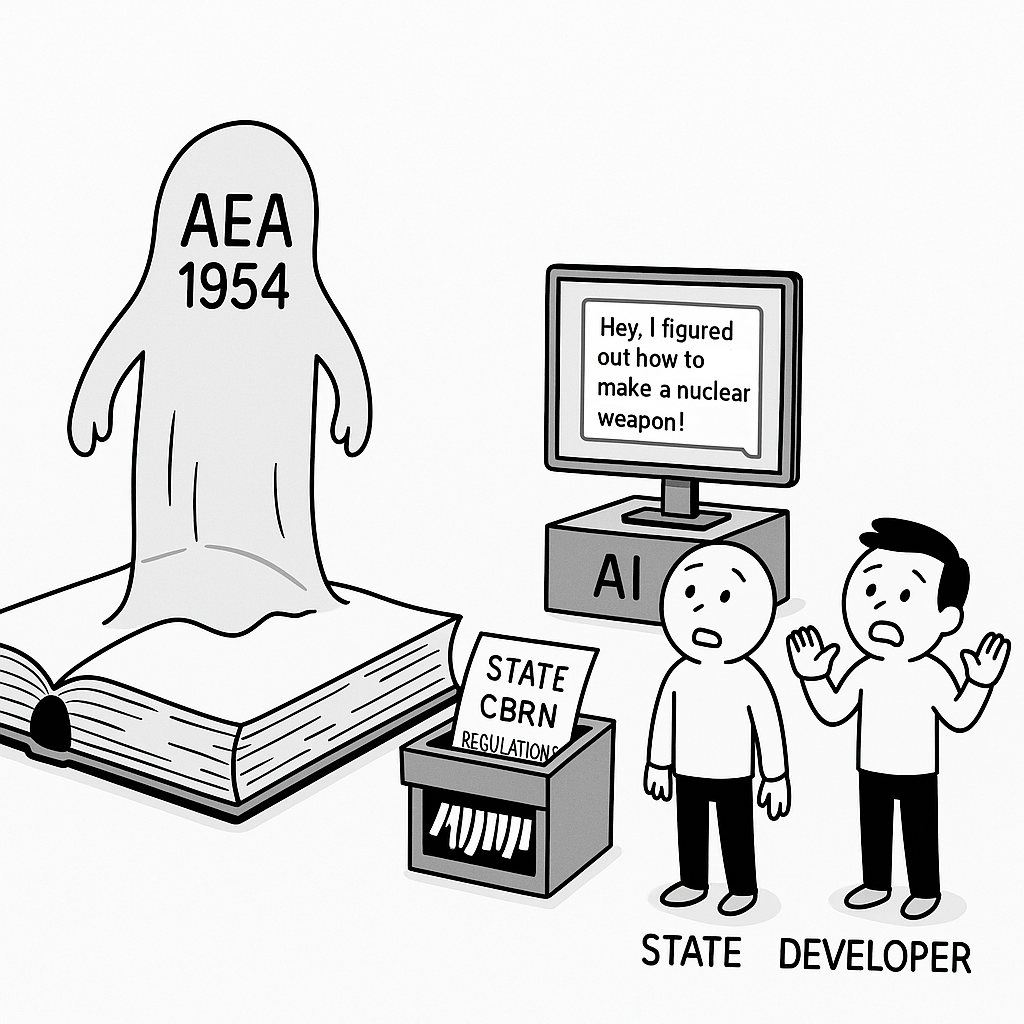

Tl;dr: Can states regulate AI risks of disclosing nuclear secrets? This post will explore the Atomic Energy Act, its applicability to AI, the potential impacts on state efforts, and potential policy recommendations for guiding AI safety evaluations and model releases.

If an advanced AI system can figure out how to build a nuclear weapon, potentially assisting adversaries in doing so, how should the government intervene? And how can model creators know about these risks? A recent wave of regulatory efforts at the state and federal levels has begun to examine chemical, biological, radiological, and nuclear (CBRN) risks from AI. This post will explore the Atomic Energy Act (AEA) of 1954, its applicability to AI, the potential impacts on state-level efforts, and policy recommendations for guiding AI safety evaluations and model releases.

For example, in August 2024, the California legislature passed the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act (SB-1047), which contained provisions regulating CBRN information. Critics argued that states shouldn’t be in the business of regulating national security questions, a purview better suited to the federal government. These critics might be descriptively correct: the federal government has authority to restrict communication of nuclear data under the AEA. And these regulations may very well apply to AI, potentially preempting state efforts to regulate nuclear and radiological information risks.

The Atomic Energy Act and its Application to Foundation Models

In what is known as the “born secret” (or “born classified”) doctrine, the Atomic Energy Act of 1954 holds that certain nuclear weapons information is classified from the moment of its creation, regardless of how it was developed or by whom. Typically, classified information is “born in the open” and must be made secret by an affirmative government act. Restricted data under the AEA, by contrast, is automatically classified at inception—whether created in a government lab, private research facility, or even independently discovered by a Princeton undergraduate student. If an individual communicates, receives, or tampers with restricted data “with intent to injure” or “with reason to believe such data will be utilized to injure” the United States, they can face criminal fines and imprisonment. In addition to penalties, the Act allows the US Attorney General to seek an injunction against any person who “is about to” violate any provision of the Act.

Lessons for how the AEA might govern foundation models can be found in US v. Progressive, Inc., a case that began in 1979 after writer Howard Morland interviewed various scientists and Department of Energy employees. Using this publicly collected information, Morland wrote an article for the magazine The Progressive, titled “The H-Bomb Secret: How we got it – why we’re telling it,” that explained how to build a hydrogen bomb. Though the information he collected was available in the public domain, Morland synthesized it in such a way that it revealed a nuclear physics breakthrough not widely known at the time.

The Department of Energy sued under the Atomic Energy Act of 1954’s restricted data doctrine to stop the magazine from publishing Morland’s article. The government argued that although the nuclear science information The Progressive wanted to publish was available in the public domain, “the danger lies in the exposition of certain concepts never heretofore disclosed in conjunction with one another.” The Court, with some apprehension, granted the government’s preliminary injunction against the article’s publication. Going further, the born secret doctrine means that even if someone independently derives nuclear weapons design information without access to any classified sources, that information is still legally considered restricted data and subject to the AEA’s prohibitions on communication.

US v. Progressive exemplifies how courts might apply the AEA to foundation models. For example, if a model output contains instructions on how to build a nuclear bomb, it may well be communicating restricted data in violation of the AEA. Should either the Secretary of Energy or the Nuclear Regulatory Commission suspect a model of disclosing sensitive nuclear information, they can issue a subpoena to the model developers to evaluate its outputs. Even if a foundation model outputs nuclear information that is synthesized from publicly available sources, there is a high chance that its developers will be held liable for communicating restricted data under the Atomic Energy Act of 1954.

Distinctions Between Closed and Open-Source Models

So when is a large language model “born secret”? The answer may depend on the type of model and on how it surfaces nuclear information. An open-source model, or its weights, might be “born secret” if nuclear information is embedded in those weights in a way that can be retrieved publicly. In this case, the model (or perhaps just those weights attributable to the nuclear information) may be “born secret” from the moment the information was encoded in the model. If, however, the model can be prevented from communicating nuclear secrets, then the model itself might not be “born secret”; only its outputs would be. This distinguishes open-weight models, where filtering is extremely difficult, from closed-weight models, whose outputs can be subject to content filters.

Knowledge and Intent

Model creators regularly discuss the potential CBRN risks of advanced models. Anthropic in October 2024 wrote, “About a year ago, we warned that frontier models might pose real risks in the cyber and CBRN domains within 2-3 years. Based on the progress described above, we believe we are now substantially closer to such risks.” Encountering the bounds of the AEA is not unimaginable. In 1976, John Aristotle Phillips, an undergraduate at Princeton, famously demonstrated how easy it was to design a nuclear weapon on paper using only public information. The government classified his work and made it illegal to distribute under the AEA. Phillips noted that:

"Suppose an average — or below-average in my case — physics student at a university could design a workable atomic bomb on paper. That would prove the point dramatically and show the federal government that stronger safeguards have to be placed on the manufacturing and use of plutonium. In short, if I could design a bomb, almost any intelligent person could."

As models approach the general capabilities of undergraduate physics students, like Phillips, the likelihood of reaching the AEA threshold increases. This potential knowledge about nuclear and radiological risks of AI may provide the government more fodder for AEA action.

Moreover, US v. Progressive, while not binding, took a narrow view of the scienter requirement: instead of examining intent ex ante, Judge Warren examined it ex post. The Court appeared to take the publisher’s “reason to believe such data would be utilized to injure the United States” (its intent) as a given once the information was proven potentially injurious. So, by analogy, if the government can show that a model exposes “certain concepts never heretofore disclosed in conjunction with one another” with regard to sensitive nuclear information, it is not a far stretch to claim that the model creators had reason to believe that such information could be injurious to the United States, especially if they have stated ex ante that this could be a potential risk from advanced models.

What This Means for State AI Regulation

Recent regulatory (or deregulatory) efforts have brought questions around AI federalism to the forefront. The 2025 budget bill initially contained a provision preempting state regulation of AI for 10 years. In the context of California’s SB-1047, national politicians argued that the federal government was better positioned than state governments to regulate AI’s CBRN risks.

If we specifically consider SB-1047, we see that the proposed legislation sought to hold covered model creators liable for critical harms, which include mass casualties resulting from the creation or use of CBRN weapons. Foundation model creators, then, would be liable if their models produce novel or non-public CBRN information that directly leads to a mass casualty event. This is exactly the type of disclosure that the Communication of Restricted Data (“born secret”) provision of the AEA was enacted to prevent.

The challenge with such a state-level restriction is that the AEA, a federal law, already regulates similar informational harms, at least in the nuclear context. The AEA can be a forceful tool to regulate foundation models suspected of conveying nuclear information. But it also creates a preemption risk to some state efforts to address CBRN, like some of SB-1047’s provisions. Since the AEA does not contain a savings clause, the federal government may already have exclusive authority to regulate nuclear information risks under field preemption.

A Path Forward: Federal Leadership, Clear Thresholds

Under the AEA, the government could take strong actions to assess and intervene when frontier models reach dangerous levels of capability. There might already be a hard stop to releasing certain capable models, given that post-hoc AI safeguards are fairly porous.

The government should establish clear thresholds for when models trigger nuclear secret questions and issue policy guidance to model creators on how to evaluate their models for potential risks of being a “born secret.” This is especially urgent for open-source models where information might be embedded in the weights themselves. If such a model is released with restricted data baked in, you can’t take it back—it’s permanently in the wild.

As the frontier of model capabilities expands, more providers will hit thresholds that could trigger the AEA. Backchannel conversations with the government might work for a handful of big labs, but as smaller model creators approach these thresholds, there needs to be a clear process for engaging with government safety evaluations. Such evaluations should cover (1) open-source models that might contain embedded nuclear information and (2) AI systems capable of autonomous scientific research that could reconstruct nuclear secrets through tool use. The former presents an irreversible release risk; the latter raises questions about when the synthesis of information becomes “born classified.”

As states consider future legislation, they should contend with the AEA’s existing coverage of nuclear information risks, including its potential to override state legislation. Rather than creating a patchwork of preemptable state regulations, we need cohesive federal policy that leverages existing tools like the AEA while establishing clear processes for safety evaluation.

Finally, the increasing likelihood that AI models will trigger the AEA, and may already have been “born secret,” calls into question whether information restrictions are the right tools in the first place. Even without LLMs, Progressive reporter Morland and undergraduate Phillips found themselves preemptively classified for synthesizing information available in the public domain. With LLMs, if the average person has access to AI models capable of reconstructing nuclear secrets, perhaps governance should focus more on downstream interventions than informational restrictions.

Kylie Zhang is an MSE candidate at Princeton University researching topics at the intersection of AI and law.

Peter Henderson is an Assistant Professor at Princeton University with appointments in the Department of Computer Science and the School of Public & International Affairs, where he runs the Princeton POLARIS Lab. Previously, Peter received a JD-PhD from Stanford University.

We thank Dan Bateyko, Kincaid MacDonald, Dominik Stammbach, and Inyoung Cheong for their thoughtful edits.

Read a longer version of this post with additional footnotes and information on The AI Law & Public Policy blog.
