[This is the second post in a series. The other posts are here: 1 3 4]

Yesterday, I wrote about the AI Singularity, and why it won’t be a literal singularity, that is, why the growth rate won’t literally become infinite. So if the Singularity won’t be a literal singularity, what will it be?

Recall that the Singularity theory is basically a claim about the growth rate of machine intelligence. Having ruled out the possibility of faster-than-exponential growth, the obvious hypothesis is exponential growth.

Exponential growth doesn’t imply that any “explosion” will occur. For example, my notional savings account paying 1% interest will grow exponentially, but I will not experience a “wealth explosion” that suddenly makes me unimaginably rich.

But what if the growth rate of the exponential is much higher? Will that lead to an explosion?

The best historical analogy we have is Moore’s Law. Over the past several decades, computing power has grown exponentially at a 60% annual rate (a doubling time of about 18 months), leading to a roughly ten-billion-fold improvement. That has been a big deal, but it has not fundamentally changed the nature of human existence; its effect on society and the economy has been more gradual.
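The growth figures above are easy to check with a little arithmetic. The sketch below assumes growth compounds once per year; it takes the 1% and 60% rates from this post, computes how long each takes to double, and works out how many years of 18-month doublings a ten-billion-fold improvement requires. The function names are illustrative.

```python
import math

def doubling_time(annual_rate):
    """Years for a quantity compounding at `annual_rate` per year to double."""
    return math.log(2) / math.log(1 + annual_rate)

def years_for_growth(factor, doubling_years):
    """Years needed to grow by `factor` at a fixed doubling time."""
    return doubling_years * math.log2(factor)

print(f"1% savings account: doubles every {doubling_time(0.01):.1f} years")    # 69.7
print(f"60% Moore's Law rate: doubles every {doubling_time(0.60):.2f} years")  # 1.47
print(f"10^10-fold at 18-month doubling: {years_for_growth(1e10, 1.5):.0f} years")  # 50
```

A ten-billion-fold gain is about 33 doublings, so at one doubling every 18 months it takes roughly 50 years, consistent with “several decades.” The savings account, by contrast, needs about 70 years for a single doubling.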

The reason that a ten-billion-fold improvement in computing has not made us ten billion times happier is obvious: computing power is not something we value deeply for its own sake. For computing power to make us happier, we have to find ways to use computing to improve the things we care most deeply about, and that isn’t easy.

More to the point, efforts to turn computing power into happiness all seem to have sharply diminishing returns. For example, each new doubling in computing power can be used to improve human health, by finding new drugs, better evaluating medical treatments, or applying health interventions more efficiently, but each doubling buys only a modest further improvement. The net result is that health improves more like my savings account than like Moore’s Law.

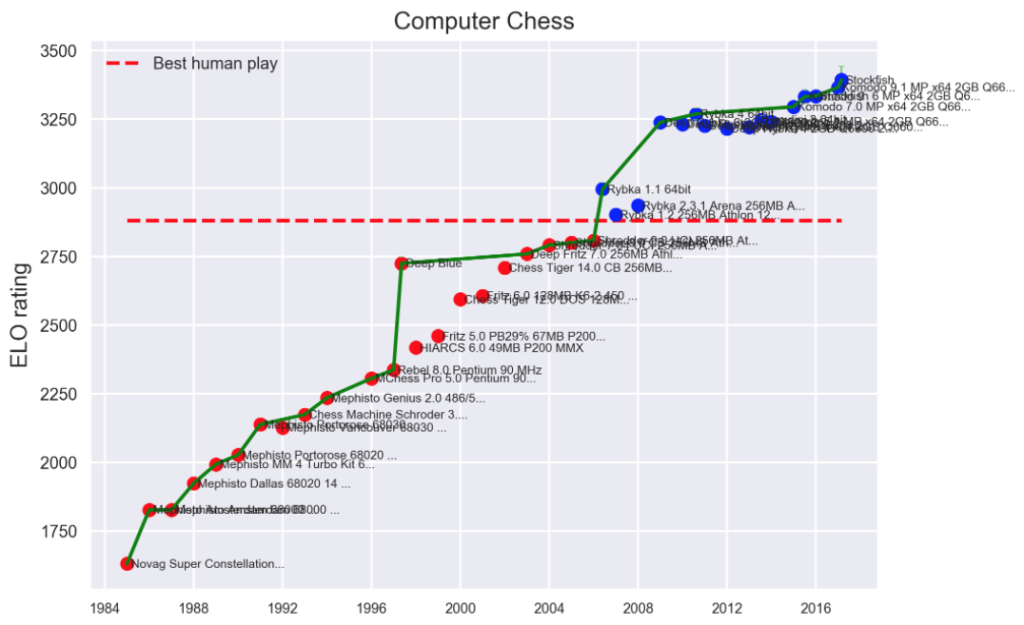

Here’s an example from AI. The graph below shows improvement in computer chess performance from the 1980s up to the present. The vertical axis shows Elo rating, the natural measure of chess-playing skill, which is defined so that if A is 100 Elo points above B, then A is expected to beat B 64% of the time. (source: EFF)
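The 64% figure follows from the standard Elo model, in which A’s expected score against B is `1 / (1 + 10 ** ((r_b - r_a) / 400))`. Strictly, “expected score” counts a draw as half a win. Here is a minimal sketch of the formula and its inverse; the function names are illustrative.

```python
import math

def elo_expected_score(rating_a, rating_b):
    """Expected score of A against B under the Elo logistic model (draw = 0.5)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def elo_gap_for_score(expected_score):
    """Rating advantage A needs to reach the given expected score against B."""
    return 400.0 * math.log10(expected_score / (1.0 - expected_score))

print(f"{elo_expected_score(1600, 1500):.3f}")  # 100-point edge -> 0.640
print(f"{elo_gap_for_score(0.64):.1f}")         # 0.64 score -> ~100 points
```

Because the scale is logarithmic in the odds, each additional 100 points multiplies A’s expected score odds, E / (1 − E), by the same factor, 10^(1/4) ≈ 1.78.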

The result is remarkably linear over more than 30 years, despite exponential growth in underlying computing capacity and similar exponential growth in algorithm performance. Apparently, rapid exponential improvements in the inputs to AI chess-playing lead to merely linear improvement in the natural measure of output.
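One way to see how exponential inputs can produce linear output: suppose, purely as an illustrative assumption, that each doubling of computing power adds a roughly constant number of Elo points, $b$. If compute grows as $C(t) = C_0 \cdot 2^{t/\tau}$ with doubling time $\tau$ (about 1.5 years under Moore’s Law), then the rating $R$ is

$$
R(t) = R_0 + b \log_2 \frac{C(t)}{C_0} = R_0 + \frac{b}{\tau}\, t,
$$

so $R$ rises by a constant $b/\tau$ points per year, a straight line on the graph. Here $R_0$, $C_0$, and $b$ are hypothetical constants; the point is that a logarithmic response to inputs turns exponential input growth into linear output growth.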

What does this imply for the Singularity theory? Consider the core of the intelligence explosion claim. Quoting Good’s classic paper:

… an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ …

What if “designing even better machines” is like chess, in that exponential improvements in the input (intelligence of a machine) lead to merely linear improvements in the output (that machine’s performance at designing other machines)? If that were the case, there would be no intelligence explosion. Indeed, the growth of machine intelligence would be barely more than linear. (For the mathematically inclined: if we assume the rate of change of intelligence over time is proportional to log(intelligence), then intelligence at time T will grow like T log(T), barely more than linear in T.)
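The T log(T) claim can be checked numerically. The sketch below integrates the toy model dI/dt = k · ln(I) with forward Euler steps. The constant k, the starting value I(0) = e (chosen so that ln I is positive and growth starts at all), and the step size are assumptions for illustration; the base of the logarithm only rescales k. Separating variables gives T = ∫ dI / ln I, the logarithmic integral, which behaves like I / ln I for large I; inverting that is what yields I ≈ T ln T.

```python
import math

def log_feedback_growth(t_end, k=1.0, i0=math.e, dt=1.0):
    """Integrate dI/dt = k * ln(I) from t = 0 to t_end with forward Euler.

    Toy model (an assumption, not a measured law): the rate at which a
    machine improves its successor grows only with the log of its own
    intelligence I, the way chess Elo grows with the log of compute.
    """
    i = i0
    for _ in range(int(round(t_end / dt))):
        i += k * math.log(i) * dt
    return i

for t in (10**3, 10**4, 10**5, 10**6):
    i = log_feedback_growth(t)
    print(f"T={t:>9,}  I(T)={i:11.4g}  I/T={i / t:6.2f}  "
          f"I/(T ln T)={i / (t * math.log(t)):.3f}")
```

In this model the average growth rate I(T)/T only roughly doubles (from a little under 8 to about 15.5) while T grows a thousandfold, and I / (T ln T) stays a little above 1, drifting toward it very slowly. For contrast, replacing `k * math.log(i)` with `k * i` turns the model into dI/dt = kI, whose solution is exponential.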

Is designing new machines like chess in this way? We can’t know for sure. It’s a question in computational complexity theory, which is basically the study of how much more of some goal can be achieved as computational resources increase. Having studied complexity theory more deeply than most humans, I find it very plausible that machine design will exhibit the kind of diminishing returns we see in chess. Regardless, this possibility does cast real doubt on Good’s claim that self-improvement leads “unquestionably” to explosion.
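As a toy illustration of the diminishing returns complexity theory deals with, consider exhaustive game-tree search. Assume every position has the same number of legal moves b (a branching factor of about 35 is a commonly quoted average for chess, used here only as an assumption). Searching d plies (half-moves) ahead then means examining about b^d leaf positions, so the reachable depth grows only with the logarithm of the compute budget. The function below is a hypothetical sketch that counts leaves only and ignores pruning.

```python
def max_search_depth(node_budget: int, branching_factor: int = 35) -> int:
    """Largest depth d with branching_factor**d <= node_budget (full-width search, leaves only)."""
    depth = 0
    # Integer arithmetic avoids floating-point error in log(node_budget) / log(b).
    while branching_factor ** (depth + 1) <= node_budget:
        depth += 1
    return depth

for budget in (10**6, 10**9, 10**12):
    print(f"{budget:>17,} positions -> depth {max_search_depth(budget)}")
```

Under these assumptions, each thousandfold increase in the budget buys only two more plies (depths 3, 5, and 7). Real engines prune with alpha-beta search, which in the best case examines roughly b^(d/2) leaves, but that changes only the base of the logarithm, not the shape of the curve.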

So Singularity theorists bear the burden of explaining why machine design can exhibit the kind of feedback loop that an intelligence explosion would require.

In the next post, we’ll look at another challenge faced by Singularity theorists: they have to explain, consistently with their other claims, why the Singularity hasn’t happened already.

[Update (Jan. 8, 2018): The next post responds to some of the comments on this one, and gives more detail on how to measure intelligence in chess and other domains. I’ll get to that other challenge to Singularity theorists in a subsequent post.]
