Authored by: Mihir Kshirsagar

This week, OpenAI announced a multibillion-dollar deal with Broadcom to develop custom AI chips for data centers projected to consume 10 gigawatts of power. This investment is separate from another multibillion-dollar deal OpenAI struck with AMD last week. There is no question that we are in the midst of making one of the largest industrial infrastructure bets in United States history. Eight major companies (Microsoft, Amazon, Google, Meta, Oracle, OpenAI, and others) are expected to invest over $300 billion in AI infrastructure in 2025 alone. Spurred by news about the vendor-financed structure of the AMD investment and a conversation with my colleague Arvind Narayanan, I started to investigate the unit economics of the industry from a competition perspective.

What I have found so far is surprising. It appears that we’re making important decisions about who gets to compete in AI based on financial assumptions that may be systematically overstating the long-run sustainability of the industry by a factor of two. That said, I am open to being wrong in my analysis and welcome corrections as I write these thoughts up in an academic article with my colleague Felix Chen.

Here is the puzzle: the chips at the heart of the infrastructure buildout have a useful lifespan of one to three years due to rapid technological obsolescence and physical wear, but companies depreciate them over five to six years. In other words, they spread out the cost of their massive capital investments over a longer period than the facts warrant—what The Economist has referred to as the “$4trn accounting puzzle at the heart of the AI cloud.”

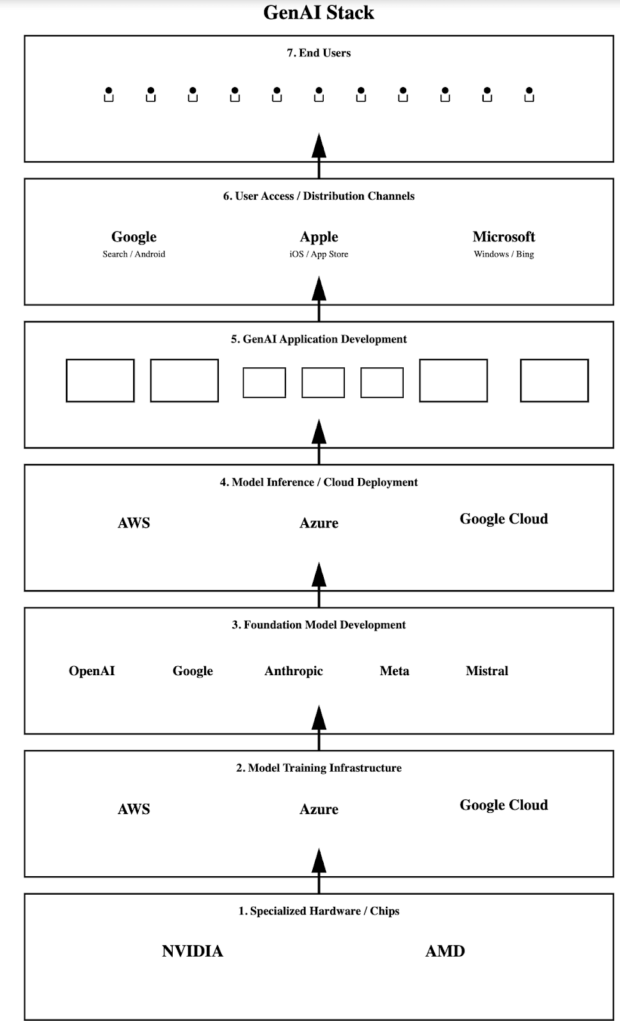

This accounting mismatch has significant competition implications, especially upstream of the model layer of the GenAI stack (Figure 1). In the application layer, enterprises choose AI models to integrate into their operations. Incumbent coalitions, in which hyperscalers (Microsoft, Amazon, Google) partner with model developers (OpenAI, Anthropic), can effectively subsidize application-layer pricing during the critical years when customer relationships are being formed. This could create advantages that prove insurmountable even when better technology becomes available.

The core issue isn’t whether chips wear out faster than accounting suggests. It’s whether the market structure being formed is fueled by accounting conventions that obscure true long-run economics, allowing incumbent coalitions to establish customer lock-in at the application layer before true costs become visible. In other words, the accounting subsidy creates a window of roughly three to six years where reported costs are artificially low. After that, the overlapping depreciation schedules catch up to operational reality. Here’s why this timing matters: that three-to-six-year window is precisely when the market structure for AI applications is being determined. Customer relationships are being formed. Enterprise integrations are being built. Multi-year contracts are being signed. Switching costs are accumulating. By the time the accounting catches up—when companies face the full weight of replacement costs hitting their income statements—the incumbent coalitions will have already locked in their customer base. The temporary subsidy enables permanent competitive advantage.

Even if we ignore the accounting question, a September 2025 Bain report estimates that AI firms will face an annual $800 billion revenue hole in 2030 to fund their capital expenses—a gap larger than Amazon’s entire annual revenue. Per my calculations, this hole grows to over $1.5 trillion annually if the true total cost of ownership is taken into account. (For reference, that is roughly equivalent to the size of the global foodservice industry.) Even assuming these self-reported figures are inflated by 50%, the revenue challenge remains unprecedented in scale.

If these coalitions establish durable customer relationships in the initial years, the market structure crystallized during this window may persist regardless of whether the underlying investment economics are sustainable at true replacement costs. The lessons from the efforts to unwind Google’s dominance of the search market are instructive here.

The Evidence on Useful Life

Technical analyses have converged on estimating the useful lifespan of AI chips at one to three years. One unnamed Google architect assessed that GPUs running at 60-70% utilization—standard for AI workloads—survive one to two years, with three years as a maximum. The reason: thermal and electrical stress is simply too high.

But physical failure isn’t the only concern. Technological obsolescence drives replacement cycles. Nvidia’s GB200 (“Blackwell”) chip provides 4-5x faster inference than the H100. When competitors deploy hardware with significantly better performance, three-year-old chips become economically obsolete even if they still function.

Investment analysts have run the numbers on what happens if accounting catches up to reality. Barclays, for example, is cutting earnings forecasts of AI firms by up to 10% for 2025 to account for more realistic depreciation assumptions.

The physical evidence, the technological trajectory, and the emerging financial data all point in the same direction: actual useful life is at most half the accounting life, and this does not even account for the significant operational expenditures required.

Understanding the Competitive Landscape

The AI market isn’t a collection of independent companies—it’s organized around a small number of incumbent coalitions:

- Microsoft-OpenAI: Microsoft invested ~$13 billion in OpenAI and provides exclusive Azure infrastructure. Microsoft sells “Azure OpenAI Service” with deep enterprise integration and benefits from OpenAI’s success through Azure consumption.

- Amazon-Anthropic: Amazon invested ~$4 billion in Anthropic with AWS Bedrock integration. Anthropic runs primarily on AWS infrastructure.

- Google: Google Cloud partnership with Anthropic (~$2 billion investment) plus Google’s own Gemini models integrated across Google Workspace and Cloud. Google also acquired DeepMind, a research and development lab that develops many new applications.

- Meta: Owns its infrastructure, develops the Llama open-weight models, and controls distribution to 3+ billion users. It does not primarily compete for enterprise API customers, but uses AI extensively in its core business.

These aren’t loose partnerships—they’re deeply integrated coalitions where the hyperscaler’s infrastructure economics directly enable their coalition’s competitive positioning at the application layer.

True outsiders trying to compete at the application layer include companies like Cohere (enterprise-focused models), AI21 Labs, Mistral, and application builders like Harvey (legal AI), Glean (enterprise search), and Writer (enterprise content). Many have struggled to scale or ended up partnering with hyperscalers—Mistral now has a partnership with Microsoft, and Cohere partners with Oracle. The emerging pattern suggests that coalition membership is becoming necessary for survival.

How the Subsidy Flows Through Partnerships

The accounting subsidy creates competitive advantages that flow through the coalition structure. A recent McKinsey analysis projects that approximately 60% of AI infrastructure spending in the next five years will go to computing hardware (chips, servers, memory), 25% to power and cooling infrastructure, and 15% to physical construction. Assume, conservatively, that half of the infrastructure spending goes to computing hardware with a useful life of one to three years. Companies can depreciate this hardware over five to six years rather than the realistic one to three, which effectively halves their reported annual depreciation expense. This cushion between reported costs and actual replacement economics creates a capital subsidy that allows coalition leaders to subsidize application-layer pricing, expand capacity faster than true economics justify, and report profitability metrics that attract capital on more favorable terms than their operational reality warrants.

The Microsoft-OpenAI Example

Microsoft’s Azure AI infrastructure investments, when depreciated over six years rather than the more realistic three years, allow Microsoft to report more attractive margins on its infrastructure business. Consider Microsoft’s ~$80 billion annual AI infrastructure spend. If half ($40 billion) goes to computing hardware with a true three-year lifespan, Microsoft faces actual replacement costs of approximately $13.3 billion per year. But by depreciating over six years, Microsoft’s reported annual depreciation is only about $6.7 billion, creating an apparent cushion of roughly $6.7 billion a year.
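The arithmetic in this example can be reproduced with a short sketch. It assumes straight-line depreciation with no salvage value and treats one year of hardware spending as a single pool of assets; the function and variable names are illustrative and do not come from any accounting library.

```python
def straight_line_annual(cost: float, life_years: int) -> float:
    """Annual straight-line depreciation expense, assuming no salvage value."""
    return cost / life_years


# Figures from the text, in billions of dollars.
annual_ai_capex = 80.0
hardware_share = 0.5  # the text's assumption that half goes to compute hardware
hardware_cost = annual_ai_capex * hardware_share

economic = straight_line_annual(hardware_cost, 3)  # realistic three-year life
reported = straight_line_annual(hardware_cost, 6)  # six-year accounting life
cushion = economic - reported

print(f"economic cost per year:  {economic:.1f}")
print(f"reported depreciation:   {reported:.1f}")
print(f"apparent annual cushion: {cushion:.1f}")
```

Run as written, this prints roughly 13.3, 6.7, and 6.7 (in billions of dollars). The cushion applies to a single year of purchases; each additional year of spending adds its own layer.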

This cushion enables Microsoft to subsidize OpenAI’s infrastructure costs during the critical years when customer relationships are being formed. OpenAI can price its APIs aggressively to win enterprise customers while operating on a cost structure that wouldn’t be sustainable at true market rates for infrastructure. Microsoft benefits because every enterprise customer that integrates OpenAI’s models becomes more deeply embedded in the Azure ecosystem.

The same pattern holds for Amazon-Anthropic. Amazon’s AWS can depreciate AI infrastructure over extended periods, subsidize Anthropic’s infrastructure costs through favorable terms, and benefit from the resulting AWS consumption as Anthropic wins customers.

What This Means for Application-Layer Competition

Incumbent coalitions can:

- Price model APIs aggressively through subsidized infrastructure costs;

- Expand capacity faster than economics would otherwise justify;

- Lock in large volumes through multi-year contracts;

- Build deep integrations into enterprise cloud environments;

- Report profitability that attracts more capital at favorable terms.

New entrants—whether independent model developers or application builders—face steeper economics. Even with access to chips at market rates, they can’t match the pricing of model developers whose infrastructure is subsidized by hyperscaler partners using six-year depreciation. Their profitability looks worse on paper, making it harder to raise capital and compete for customers during the critical window when market structure is being determined.

The strategic bet: By years 4-5, when accounting reality might catch up to operational reality, customer relationships will be locked in through switching costs, integration depth, and organizational inertia.
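To see why the timing of the catch-up matters, here is a minimal sketch of overlapping depreciation schedules. It is my own illustration, not a model of any company's books: it assumes the same amount of hardware is bought every year (a hypothetical $40 billion), straight-line depreciation, and no salvage value.

```python
def depreciation_by_year(annual_capex: float, life_years: int, horizon: int) -> list[float]:
    """Total straight-line depreciation expense in each year when the same
    amount of hardware is purchased at the start of every year."""
    schedule = []
    for year in range(1, horizon + 1):
        # A vintage bought in year v is still on the books in `year`
        # if v <= year < v + life_years, so at most `life_years` overlap.
        active_vintages = min(year, life_years)
        schedule.append(annual_capex * active_vintages / life_years)
    return schedule


ANNUAL_HARDWARE_CAPEX = 40.0  # $ billions per year; illustrative assumption
HORIZON = 8

economic = depreciation_by_year(ANNUAL_HARDWARE_CAPEX, 3, HORIZON)
reported = depreciation_by_year(ANNUAL_HARDWARE_CAPEX, 6, HORIZON)
gap = [e - r for e, r in zip(economic, reported)]

for year, (e, r, g) in enumerate(zip(economic, reported, gap), start=1):
    print(f"year {year}: economic {e:5.1f}  reported {r:5.1f}  gap {g:5.1f}")
```

Under these assumptions the gap between economic cost and reported depreciation peaks in year 3 and closes in year 6, when six overlapping vintages are all being expensed at once. If spending grows each year rather than staying flat, the gap would not close on this schedule; that case is not modeled here.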

Three Plausible Scenarios

The durability of incumbent advantages involves some complex projections. Let me lay out three scenarios and examine how the accounting subsidy affects competition in each.

Scenario A: Training Keeps Scaling

If frontier models keep improving meaningfully with more compute, then training capacity matters more than inference efficiency. This is a strong assumption that does not appear supported by current science. Nevertheless, playing out this world, we see that model quality differentiates providers, customers pay premium prices for frontier capabilities, and incumbent coalitions who built massive training capacity have lasting advantages. The moat is capability-based, reinforced by infrastructure scale.

Incumbent coalitions face profitability pressure when accounting catches up to operational reality, but they retain competitive advantages through superior models. New entrants with better unit economics can’t easily compete because the game is about model quality, not inference cost. The capital requirements are enormous and ongoing. Whoever builds scale first maintains advantages.

There is also a policy question about whether private capital can sustain the hundreds of billions in annual replacement cycles indefinitely, or whether maintaining leadership requires ongoing policy support once true costs become visible.

Scenario B: Models Plateau, Lock-In Holds

If model capabilities hit diminishing returns and most models become “good enough,” competition shifts to inference efficiency, cost, and building applications. When the market power moves upstream, that creates the most troubling competitive dynamics.

In this world, models plateau in capability, inference costs have fallen dramatically, and open source models match or approach proprietary quality. But incumbent coalitions who built scale in the early years retain advantages through:

- Deep enterprise integrations built during the buildup period;

- Multi-year contracts signed when they had pricing power;

- Compliance certifications and security frameworks built around specific coalition stacks;

- Organizational inertia and switching costs;

- Brand trust and reliability reputation.

New entrants might have better unit economics, but if switching costs are high enough, customers stick. This is where the Google search case becomes the crucial analytical framework.

The Google Search Parallel

The Justice Department’s case against Google in search provides a roadmap for understanding how customer lock-in can persist even when competitive alternatives exist with comparable or better economics. Google maintained search dominance through default placements and integration lock-in. Google paid billions annually to be the default search engine on Safari, Android devices, and elsewhere. These defaults created inertia: users rarely switched even when alternatives were “one click away.” The court found this lock-in was anticompetitive.

The insight for AI infrastructure is that even in a market where switching takes literally a few clicks, default positions and integration created durable advantages that competitors couldn’t overcome with better economics or comparable quality.

Now consider that switching costs are far higher in the AI market:

- Enterprise integrations require months of engineering work;

- Multi-year contracts lock in pricing and commitments;

- Security certifications, compliance frameworks, and operational procedures are built around specific coalition stacks;

- Organizational workflows, training, and tooling are built around specific providers.

In other words, the lock-in is to the entire hyperscaler infrastructure stack.

If Google could maintain dominance in search with relatively low switching costs, how much more durable might AI application-layer lock-in prove when enterprises are deeply integrated into coalition stacks?

Scenario C: Models Plateau, Technology Enables Entry

There is an alternative scenario where the competitive effects could be less severe. Here, models plateau, inference commoditizes, and switching costs prove lower than expected. APIs standardize across providers, models are equally capable, price becomes the primary differentiator, and integration depth matters less than anticipated.

New entrants can now compete effectively on price and service, leveraging newer, more efficient infrastructure. The early years’ investment by incumbents might look wasteful in retrospect. Better technology in newer chips defeats scale advantages on older infrastructure.

For competition, this means there is no permanent foreclosure—just wasteful spending that created temporary advantages and shaped the market structure during a critical period, even though those advantages don’t persist.

Path Forward: Which Scenario Are We In?

The short answer is that we don’t know yet. The available evidence suggests model capabilities have plateaued and inference scaling is the name of the game. As a result, inference costs will fall, but we don’t know how dramatically. Customer lock-in at the application layer might prove durable (like Google search) or weaker than expected.

If we’re in Scenario B (models plateau, enterprise lock-in), the accounting subsidy lets incumbent coalitions foreclose competition at the application layer during the critical window, and switching costs prevent correction even when better alternatives emerge. This is the Google search outcome.

If we’re in Scenario C (models plateau, low switching costs), the asset-life treatment enables wasteful overinvestment that may temporarily distort competition, but it does not create permanent harm to the market structure.

Effect of Technology Deflation

One intuitive consideration is that better chips and algorithms should undermine incumbent advantages over time.

The problem is that technology improvement helps everyone, but it helps incumbents more when lock-in exists. For coalitions with locked-in customers, capability expands through replacement spending alone. They are continuously upgrading infrastructure, and existing customers automatically benefit.

New entrants with better technology have lower unit costs, but they still must acquire customers. This is challenging when customers are already integrated with the OpenAI APIs through Azure, operating under multi-year contracts, relying on compliance frameworks built around the incumbent stack, facing switching costs that exceed savings from better technology, and receiving continuous capability upgrades from their current provider.

The core insight from the Google search case: the technology deflation curve that should enable new entry actually reinforces incumbent advantages when lock-in is strong.

Vendor Financing: The Circular Flow Amplification

The competitive dynamics become more concerning when combined with the circular financing structure at the heart of the AI infrastructure boom. The circular structure reinforces the coalition dynamics and makes it harder for new entrants to compete in the future.

Nvidia has embarked on a strategy of making strategic equity investments in its customers.

CoreWeave is the clearest example. Nvidia owns roughly 7% of the company, a stake worth about $3 billion as of June 2025. CoreWeave has purchased at least 250,000 Nvidia GPUs—mostly H100s at roughly $30,000 each, totaling around $7.5 billion. Essentially, all the money Nvidia invested in CoreWeave has come back as revenue.

In turn, CoreWeave signed $22.4 billion in contracts with OpenAI to provide AI infrastructure. Nvidia participated in OpenAI’s $6.6 billion funding round in October 2024, then announced a $100 billion investment commitment in September 2025.

The circle takes shape: Nvidia invests in OpenAI. OpenAI signs contracts with CoreWeave. CoreWeave buys GPUs from Nvidia. Nvidia has equity in CoreWeave. The money flows in a loop, with each transaction appearing as legitimate revenue or investment depending on which company’s books you examine.
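One way to make the loop concrete is to write the flows down as a small directed graph and search for paths that return to their starting point. The sketch below uses only the relationships named in the text; the data structure and the `find_cycles` helper are illustrative, and no dollar amounts are attached to the edges.

```python
# Directed money flows named in the text: payer -> [(recipient, kind of flow)].
flows = {
    "Nvidia": [("OpenAI", "equity investment"), ("CoreWeave", "equity stake")],
    "OpenAI": [("CoreWeave", "infrastructure contracts")],
    "CoreWeave": [("Nvidia", "GPU purchases")],
}


def find_cycles(graph: dict, start: str) -> list[list[str]]:
    """Return every simple path that leaves `start` and comes back to it."""
    cycles = []

    def walk(node: str, path: list[str]) -> None:
        for recipient, _kind in graph.get(node, []):
            if recipient == start:
                cycles.append(path + [recipient])
            elif recipient not in path:  # avoid revisiting a company
                walk(recipient, path + [recipient])

    walk(start, [start])
    return cycles


cycles = find_cycles(flows, "Nvidia")
for cycle in cycles:
    print(" -> ".join(cycle))
```

With these four edges, the search finds two loops that start and end at Nvidia: one through OpenAI and CoreWeave, and a shorter one through CoreWeave alone.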

Not many details have been released about the AMD deal with OpenAI, but reports suggest it involves similar features of circular financing.

Lessons from the 2001 Telecom Bust

We’ve seen circular vendor financing disrupt market structures before. During the late 1990s telecom boom, equipment makers financed their own customers to drive sales. Lucent offered $8.1 billion in vendor financing ($15.8 billion in today’s dollars), which represented 24% of revenue. Nortel had $3.1 billion ($6.0 billion today), and Cisco had $2.4 billion ($4.7 billion today).

The strategy: lend money to cash-strapped telecom companies so they could buy your equipment. Everyone wins. You book revenue. Your customers build networks. The market bids up your stock. Repeat.

Until the music stopped.

Forty-seven competitive local exchange carriers went bankrupt between 2000 and 2003. When customers defaulted, vendor financing became bad debt. Lucent wrote off $3.5 billion in customer loans.

Today’s numbers are larger. Nvidia’s disclosed investments and financing commitments total roughly $110 billion against $165 billion in last-twelve-months revenue. That’s 67% of revenue—nearly three times Lucent’s exposure at the peak.
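The comparison can be checked with a few lines of arithmetic. This sketch uses only the figures quoted in the text; `exposure_ratio` is a hypothetical helper name, and Lucent's 24% is taken as stated rather than recomputed.

```python
def exposure_ratio(financing: float, revenue: float) -> float:
    """Vendor financing and investment commitments as a share of revenue."""
    return financing / revenue


# Figures from the text, in billions of dollars.
nvidia_commitments = 110.0
nvidia_ltm_revenue = 165.0
lucent_exposure = 0.24  # stated in the text as a share of revenue

nvidia_exposure = exposure_ratio(nvidia_commitments, nvidia_ltm_revenue)
multiple_of_lucent = nvidia_exposure / lucent_exposure

print(f"Nvidia exposure: {nvidia_exposure:.0%}")
print(f"Multiple of Lucent's peak exposure: {multiple_of_lucent:.1f}x")
```

This prints 67% and 2.8x, matching the "nearly three times" comparison.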

There are crucial differences. First, there are no allegations of fraud or any untoward activity. Second, the competitive implications differ because of asset lifespans. In telecom, assets such as fiber had long useful lives. When companies went bankrupt, other carriers could buy that fiber at fire-sale prices and use it for decades.

The short lifespan of AI chips changes this fundamentally. If the current buildout proves excessive and coalitions face financial pressure, excess capacity won’t be available for new entrants to acquire and deploy effectively. Three-year-old chips at fire-sale prices don’t provide a competitive foundation when incumbent coalitions are running current-generation hardware. Even if the circular financing unwinds, it won’t necessarily enable competitive entry at the application layer.

Regulatory Implications

The evidence shows that the market structure is being determined by competition among three coalitions, with no realistic path for true outsiders to compete at the application layer during the critical window when customer relationships are being formed. Competition authorities are starting to look at market concentration in AI, focusing on traditional antitrust metrics: market share, pricing power, exclusionary conduct. As far as I know, the competitive effects of vendor financing and accounting policy have not yet been discussed extensively.

Two policy proposals can help maintain competition. First, enhanced disclosure requirements about capital and operating costs. Any AI infrastructure project receiving government support should disclose realistic useful life assumptions for hardware, expected replacement schedules and costs, total capital requirements under multiple technology scenarios, relationships between vendor financing and equipment procurement, partnership economics showing how infrastructure costs flow from hyperscalers to model developers, and analysis of customer lock-in mechanisms at the application layer.

Second, developing interoperability standards to prevent lock-in. Interoperable standards on model APIs and cloud integration frameworks make it easier for enterprises to switch between providers. The Google search remedy focused on reducing switching costs; the same principle applies here.

In summary, the market structure for AI firms is coalescing around models that rely on a combination of massive capital flows, circular financing structures, and accounting assumptions that may not match operational reality. The accounting subsidy enables incumbent coalitions to establish market share in the early years, when critical customer relationships at the application layer are being formed.

The Google search case demonstrates that lock-in can be decisive even with low switching costs. AI application-layer integration has far higher switching costs. As I’ve said, we don’t yet know which scenario we’re in. But the decisions being made now—based on assumptions that may overstate financial sustainability by a factor of two—are determining the future market for AI services at the application level.

Thanks to Arvind Narayanan for innumerable conversations and feedback on a draft, Jacob Shapiro for feedback, and Felix Chen for editorial suggestions and collaboration on a related academic work-in-progress.

Mihir Kshirsagar directs Princeton CITP’s technology policy clinic, where he focuses on how to shape a digital economy that serves the public interest. Drawing on his background as an antitrust and consumer protection litigator, his research examines the consumer impact of digital markets and explores how digital public infrastructure can be designed for public benefit.
